Singapore online safety bill must embed human rights

Women wearing face masks use their mobile phones as haze shrouds Singapore's central business district August 26, 2016. REUTERS/Edgar Su

Moves to regulate online safety and harmful content must not sacrifice the right of Singaporeans to freely express themselves online

Dhevy Sivaprakasam is the Asia Pacific policy counsel at Access Now

On Nov. 9, Singapore’s Parliament passed the Online Safety (Miscellaneous Amendments) Bill (Online Safety law) to “improve safety” and “combat harmful content” online. This law, along with two related regulations, the Content Code for Social Media Services (CCSMS) and the Code of Practice for Online Safety (CPOS), will likely be implemented in 2023 to address content that incites violence, sexual abuse, self-harm, and harms to public health and security.

Singapore’s efforts reflect a global trend towards governmental content regulation. In proposing these measures, it referenced similar efforts in Germany, the United Kingdom, the European Union, and Australia.

However, these initiatives must not sacrifice the rights to freely express, share, and receive information online under the guise of safety. As former U.N. Special Rapporteur David Kaye has noted, “even rules designed with the best of intentions” can violate the rule of law if they are not clearly and comprehensively grounded in the principles of legality, legitimacy, necessity, and proportionality.

As a starting point, legality requires rules to be clearly defined so that people who use the internet, internet intermediaries (including social media services), courts, and authorities know exactly what content is impermissible online. Only then can they enforce regulations fairly.

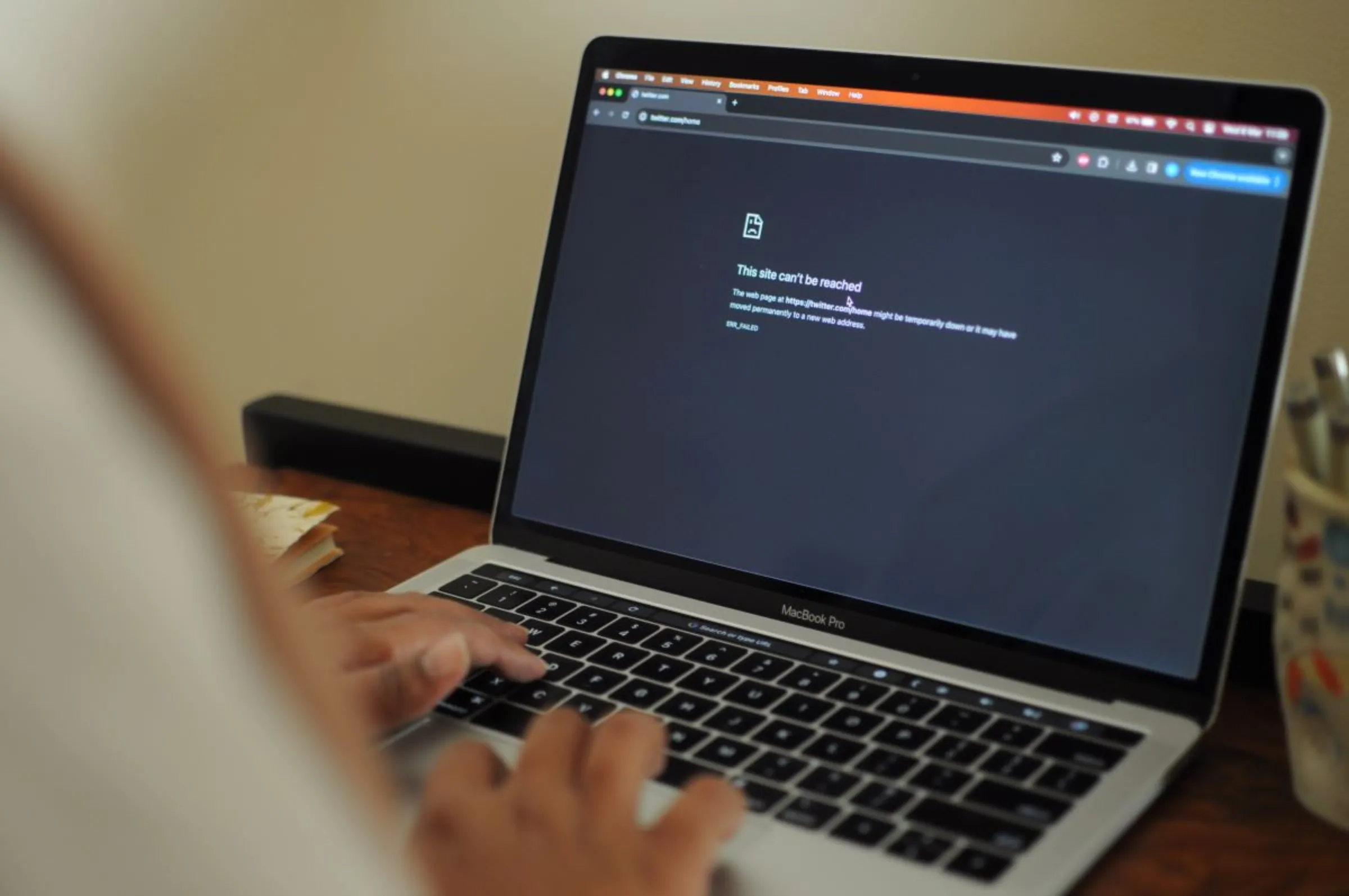

The Online Safety law, however, does not currently meet this threshold. Its vague definitions of “egregious content” risk overbroad enforcement, including censoring information that “advocates or instructs” content “likely to cause feelings” of racial or religious ill will or hostility, which could even include legitimate reporting or advocacy discussing race and religion online.

This risk of overbroad enforcement can be mitigated by including explicit provisions upholding freedom of expression within the law, and the incoming CPOS and CCSMS. As a next step, limitations on free expression must be strictly necessary and proportionate towards the legitimate aim of “protecting national security, public order, health or morals.”

Where the methods least intrusive on the right to free expression can be employed, they should be; and where penalties are so disproportionate as to render regulation ineffective, they should be revised.

In this respect, time limits imposed on companies to take content down should not be so disproportionate as to incentivise knee-jerk reactions to avoid penalties rather than substantive review of content. In hearings on Britain’s Online Safety Bill 2021, Kaye cautioned against “perversely incentivising” companies through punitive timelines.

This is instructive for Singapore’s rules. They should not, for example, go down the routes of Vietnam or India, which give platforms 24 hours, or Indonesia, which gives them as little as four hours, to remove content deemed to violate national security. Such deadlines are not only practically impossible to meet in such populous countries, but can also facilitate administrative abuse of the narrative of security to curtail critical dissent.

As a third step, adequate independent oversight and remedial mechanisms are fundamental to ensure human rights-centric implementation. Australia’s Online Safety Act 2021 was criticised for not incorporating adequate oversight measures, transparent reporting on content take-downs, or an effective appeals process for content removal notices. Germany’s Network Enforcement Act 2017 similarly came under fire from the U.N. Human Rights Committee for failing to institute judicial oversight or access to redress, before amendments last year.

Singapore’s Online Safety law, CPOS, and CCSMS should therefore explicitly include independent and effective oversight mechanisms and institute transparent, public reporting regarding all take-down requests. The law’s current prescribed process for appealing a content removal decision is to submit such appeals to the minister, who has executive powers to determine regulations in the first place. This raises real risks of unfettered discretion by authorities charged with implementing the law.

In 2020, the Global Network Initiative, a coalition of industry, civil society, and academic experts, reviewed more than 20 content regulation initiatives across the world before concluding that a human rights-centric approach was best to help governments achieve more informed and effective outcomes which would not “infringe on their own commitments.”

Singapore’s efforts to protect online safety will only be strengthened by such guidance, and must embed human rights at their core.

Any views expressed in this opinion piece are those of the author and not of Context or the Thomson Reuters Foundation.
