Can tech protect US schools from mass shootings?

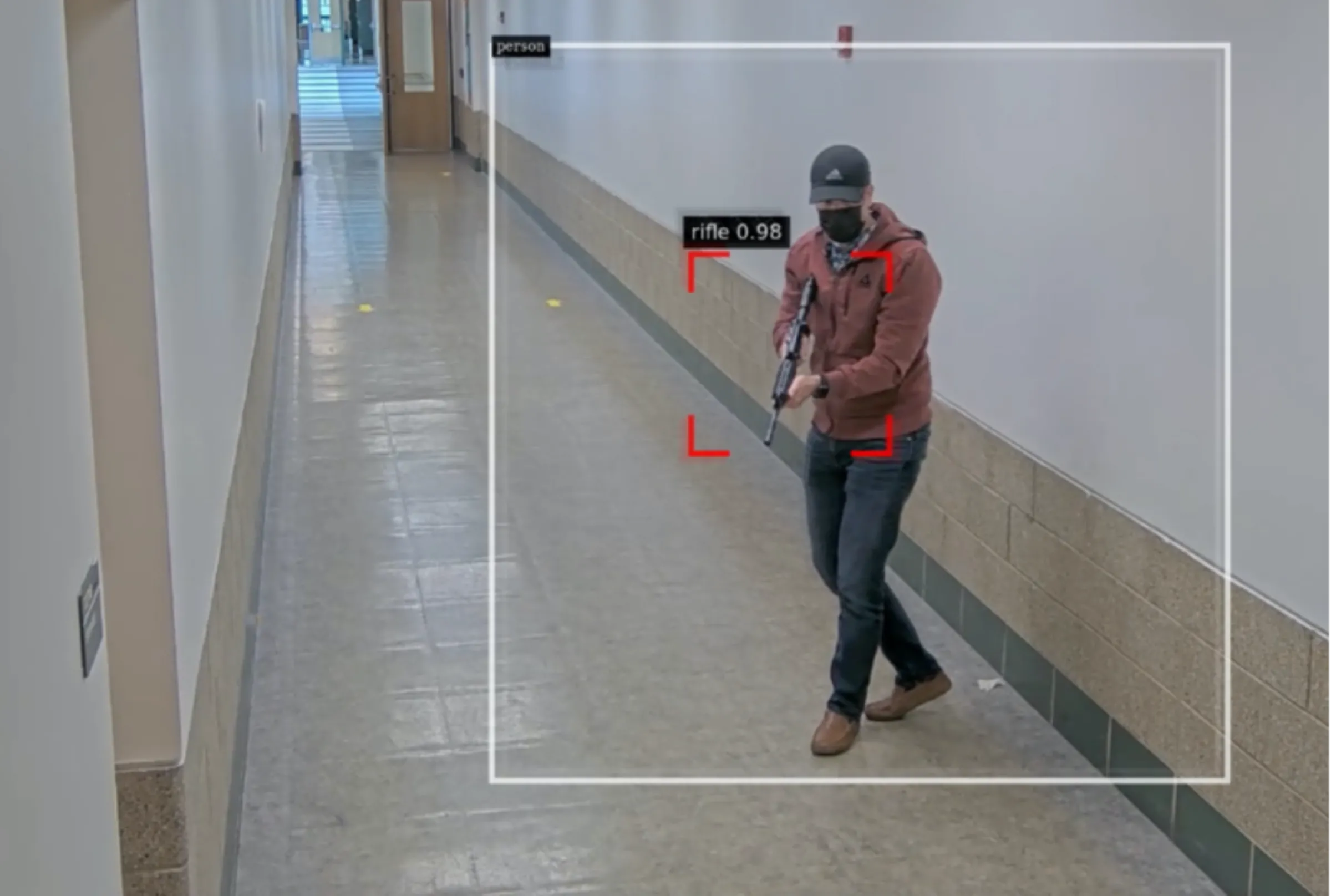

A demonstration of AI gun-detection technology from Omnilert, May 2, 2023. Handout via Thomson Reuters Foundation

What’s the context?

Demand for AI-driven security systems jumped after the Uvalde shooting, but concern is growing over their ethics and effectiveness.

A few days after a gunman killed 19 children and two teachers in Uvalde, Texas, a year ago, Taser maker Axon Enterprise floated the idea of a "non-lethal" drone for schools that could be activated by AI-powered surveillance.

The idea caused a stir, prompting the company's own AI ethics advisory board to quit in protest and highlighting growing unease about the ethics and effectiveness of security tools that tech firms are aggressively marketing to U.S. schools.

"I had to have my secretary screen out the calls from all these companies," said Rita Bishop, former superintendent of the Roanoke, Virginia, school system, recalling sales pitches for everything from drones to AI-powered surveillance cameras and weapons detectors.

But mass shootings like the one in Uvalde have fueled demand from schools for tech security systems, Dave Fraser told Context. Fraser is CEO of Omnilert, whose AI-powered tool hooks into school surveillance cameras to detect firearms on campus.

Schools have also been able to tap into nearly $200 billion in COVID-19 relief funds and other new government funding streams to purchase such tools, said Odis Johnson Jr., director of the Johns Hopkins Center for Safe and Healthy Schools.

"More schools are awash in money," he said. "And there's a robust tech sector pushing these technologies."

Gun-detection systems

For some experts and school safety officials, new tech-based tools are just one part of a practical and multi-pronged approach to tackling school violence.

"We don't try to pretend we are the only solution at all, it's part of a layered approach," said Fraser from Omnilert.

At Charles County Public Schools in Maryland, security director Jason Stoddard said the school system decided to install Omnilert on outward-facing cameras because he had noticed that the shooters in Uvalde and in another attack in Parkland, Florida, approached their campuses with their guns drawn.

Omnilert is one of a growing list of firms offering gun-detection technologies.

ZeroEyes, a Philadelphia-based company, said its gun detection technology is deployed in schools, universities and other locations in over 30 states.

Like Omnilert, it has human reviewers who check potential guns flagged by its AI.

On its website, the company says its gun-detection system could have helped Sandy Hook Elementary School in Connecticut respond faster to a 2012 shooting in which 26 people were killed.

"We believe we could have helped first responders by providing situational awareness which would likely help them to find and contain the threat more quickly and efficiently, potentially saving lives," a spokesperson said in emailed comments.

According to a tally by The Intercept, more than 65 school districts have bought or tested AI gun detection from a variety of companies since 2018, spending a total of over $45 million, a small slice of an estimated $3-billion-a-year school safety industry.

There are serious questions, however, about the efficacy of such tools, said Ken Trump, the president of National School Safety and Security Services, a school safety consulting firm.

"Schools have become fertile ground for very underdeveloped AI software; they use schools as guinea pigs," he said.

He said educational establishments were increasingly drawn to flashy tech solutions at the expense of more basic measures such as training teachers on how to respond to shootings, making structural improvements to buildings, and keeping doors locked.

While surveillance tools might help accelerate a school's response, they are unlikely to deter shooters, said Johnson Jr. of Johns Hopkins.

"There is no peer-reviewed research on these AI technologies" to gauge their effectiveness, he added.

Detect and defend?

At the 37 schools he works to keep safe in Maryland, Stoddard said he tries to make investments in line with what he calls the "five Ds": deter, detect, delay, deny and defend against a shooting.

The AI-powered cameras, he said, are part of "detecting," while other programs, such as training staff to be alert to certain behaviors, serve a different function.

One of the fastest-growing safety tech firms is Evolv, whose AI-powered weapons detection system has expanded from just 20 school buildings in 2021 to over 400 this year.

The firm says its system can identify concealed weapons as students walk into a building without causing significant delays, using cameras, sensors and AI to analyze people as they pass through its scanners.

The company has, however, been dogged by questions about the efficacy of its product.

Last year, the Utica School System in New York said it would remove its $4 million Evolv system after a stabbing at one of its schools equipped with the technology.

A spokesperson for Evolv said the company did not comment on particular customers, but that schools were a growing part of its business.

"Evolv's customer-base is comprised of and advised by many seasoned law enforcement and security professionals that are aware of the same concept that no system is perfect which is why layers of security are needed to help mitigate risk," they said in an emailed statement.

Classroom 'surveillance zone'

There are also ethical concerns.

"Kids need to be in schools that treat them like students, instead of suspects," said Johnson Jr.

He and other experts worry that surveillance-based responses to school violence foster a hostile environment that is not conducive to learning, taking a particular toll on students from Black and other overpoliced communities.

"We should not be turning the classroom into a combat zone or a surveillance zone," said Michael Connor, executive director of Open MIC, a nonprofit that is urging Axon's shareholders to vote at next month's shareholder meeting to abandon the plan to deploy drones in schools and other public places.

Axon's board has recommended that shareholders vote against the proposal.

When the company's AI ethics board resigned last year, its members wrote in an open letter that the type of surveillance the drone program would require "will harm communities of color and others who are overpoliced."

An Axon spokesperson said in emailed comments that the school-based drone had not progressed beyond the concept stage, but the company was still exploring drones in other contexts.

In a national study of U.S. high schools published in 2022, Johnson Jr. found that schools relying most heavily on technology such as weapons detectors and surveillance cameras had lower test scores and college attendance rates for all students.

Black students were overrepresented in those schools, and Johnson Jr. said the surveillance likely creates a climate of distrust and suspicion.

Bishop, the former superintendent from Virginia, said she was skeptical that expensive new tech would make much of a difference in the long run.

"After a high-profile shooting, schools are desperate; we want to make parents feel good, but we're often grasping at straws," she said.

"A lot of new funding is going to a bunch of sexy stuff that will be part of the local landfill shortly."

(Reporting by Avi Asher-Schapiro; Editing by Helen Popper and Zoe Tabary)

Context is powered by the Thomson Reuters Foundation Newsroom.
