How leaked Facebook documents propelled social media lawsuits

Former Facebook employee and whistleblower Frances Haugen testifies during a Senate Committee on Commerce, Science, and Transportation hearing entitled 'Protecting Kids Online: Testimony from a Facebook Whistleblower' on Capitol Hill, in Washington, U.S., October 5, 2021. Drew Angerer/Pool via REUTERS

What’s the context?

Papers leaked by Frances Haugen are being cited as evidence as parents sue over their children's mental health crises

Dozens of child safety lawsuits filed in the United States over alleged social media harm draw heavily on a trove of internal documents leaked by former Facebook employee Frances Haugen in 2021.

The so-called Facebook Papers were a game-changer, lawyers and researchers say, because they showed that the company - now called Meta Platforms Inc - knew its Instagram app could adversely affect the mental health of teenage girls in particular.

Facebook has said the documents have been mischaracterized and taken out of context, and that they "conferred egregiously false motives to Facebook's leadership and employees."

Soon after the leak, the company released an annotated version of the documents, including numerous clarifications, and says it has implemented a slew of new features aimed at protecting young users.

Here are some of the issues highlighted in the papers shared by Haugen and how they propelled U.S. lawsuits:

Instagram and teenage girls

One set of the leaked documents that is cited in numerous lawsuits includes internal company research that sought to measure the impact of its Instagram product on young girls by using focus groups and user surveys.

A leaked 2020 slideshow summarizing the Instagram findings that was first reported in the Wall Street Journal included the phrase "We make body issues worse for 1 in 3 teen girls".

Facebook later said that slide only applied to a subset of girl users who had reported prior problems.

Another leaked slide in the same presentation said 13% of girls reported an increase in their self-harm impulse and suicidal thoughts after using Instagram. Meta later cited research showing a lower figure.

Foreknowledge of harms?

The research slides are cited extensively as evidence in a number of the lawsuits filed in the United States.

They include the case of a 13-year-old girl from Colorado who struggled with suicidal thoughts, an 18-year-old from Alabama who needed medical care for her disorders, and a lawsuit brought by the Seattle School District seeking funds to help it address mental health crises among its students.

In the Colorado suit, for example, the documents are cited to argue that "Meta was aware (its) products cause significant harm to its users, especially children."

In light of that, it says the company should have warned parents, or made design changes to minimize the risks.

Facebook says it has introduced a number of features aimed at protecting children, such as removing certain topics from in-app searches and restricting messaging between adults and under-18s.

Thomson Reuters Foundation/Tereza Astilean

Child safety features

Some of the key leaked Meta documents show discussions within the company about safety-related changes, such as including warnings about excess screen time and letting users filter out content they do not want to see.

Meta has said that some child-safety features - including anti-bullying tools built into Instagram - were rolled out before the documents came to light. Others, like a Family Center hub for parents to better control their children's online activity, were introduced after the leaks.

The lawsuits say the fact that such issues were considered, without swift corresponding action, shows the companies did not do enough to protect minors.

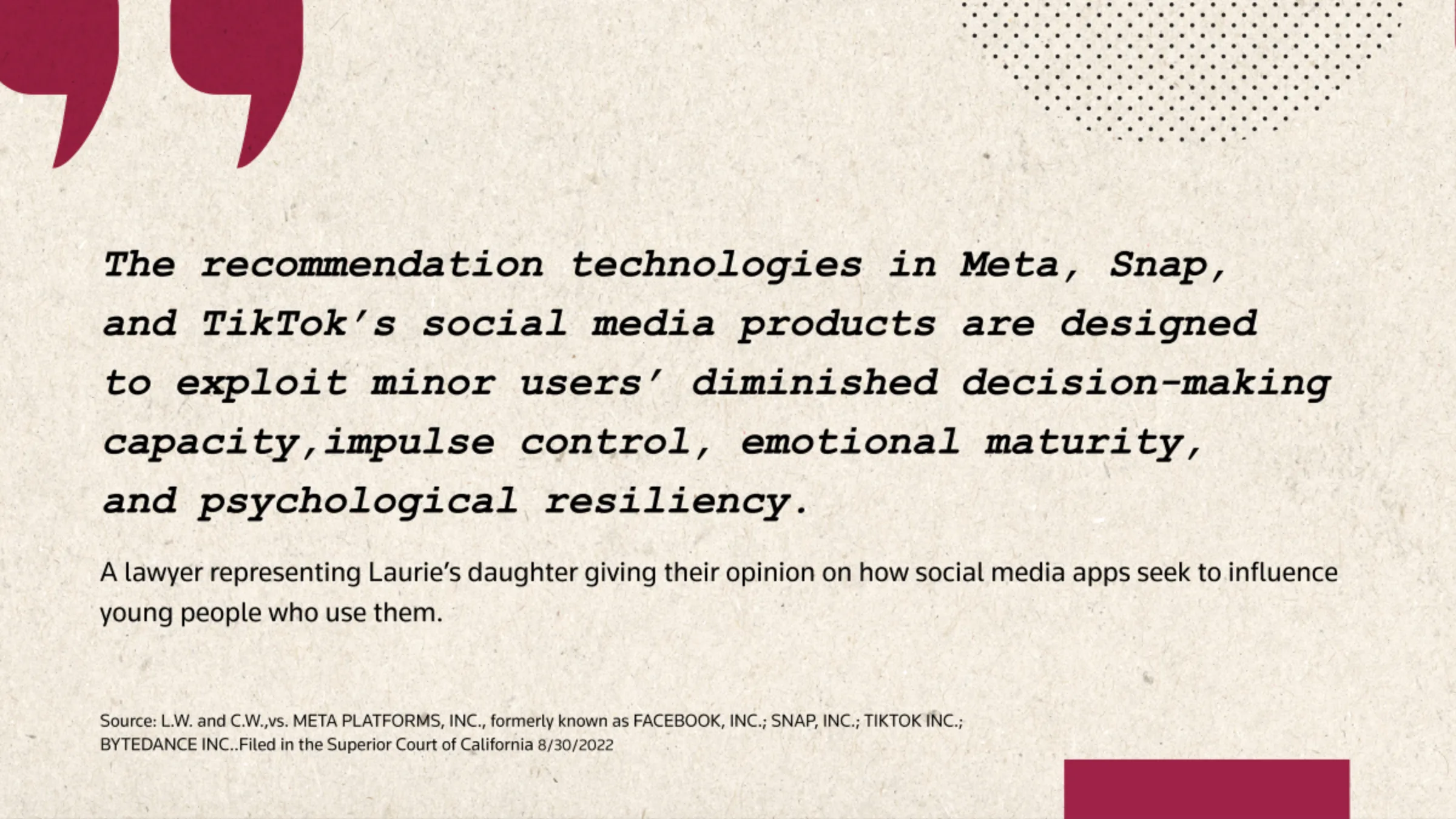

In a lawsuit against Meta filed by a woman whose teenage daughter was groomed by predatory older users online, her lawyers point to an internal Facebook discussion board in which employees expressed concerns about how the friend recommendation system could be abused by adults to contact children.

In a document showing the discussion, a Facebook researcher estimates that 70% of the reported "adult/minor exploitation" on the platform could be traced back to recommendations made through the "People You May Know" feature.

Another Facebook worker suggests in the same message board that the tool should be disabled for children.

How Facebook has responded

Since the documents became public, Facebook has made a number of changes, including removing children's accounts from friend recommendations made to adults.

Senior Facebook executives have repeatedly rejected the criticisms based on the leaked documents and defended the company's record on safeguarding teenage users.

"Right now, young people tell us - eight out of 10 tell us - they have a neutral or positive experience on our app," Facebook's global head of safety, Antigone Davis, said when testifying before the U.S. Congress in 2021.

"We want that to be 10 out of 10. If there is someone struggling on our platform, we want to build product changes to improve that experience and help support that."

Facebook cites its numerous measures to protect children, including defaulting users under the age of 16 to private accounts when they join Instagram and a series of parental supervision tools helping parents check their children's interactions.

(Editing by Helen Popper.)

Context is powered by the Thomson Reuters Foundation Newsroom.